Practical PyTorch 101

Stefan Otte | stefan.otte@gmail.com | https://nodata.science

Thanks reinforce!

(Accompanying Workshop on the 22nd at 13:30)

(Material https://github.com/sotte/pytorch_tutorial WIP)

The Tyranny of Choice & Why?

What is PyTorch?

"PyTorch - Tensors and Dynamic neural networks in Python with strong GPU acceleration. PyTorch is a deep learning framework for fast, flexible experimentation." -- pytorch.org

- eager execution or build-by-run (not build-then-run)

- PyTorch is like numpy (on the GPU and with autograd)

- concise API surface and good abstractions

- PyTorch is just stupid Python

import torch
from IPython.core.debugger import set_trace

def my_relu(x: torch.Tensor) -> torch.Tensor:
    # set_trace()  # <-- this! uncomment to stop in the debugger right here
    if x < 0:  # plain Python control flow on a tensor value
        print("if branch. x:", x)
        return x * 0
    return x

x = torch.tensor(-2., requires_grad=True)
res = my_relu(x)
res.backward()  # autograd follows the branch that actually ran
print("val: ", res.item())
print("grad:", x.grad.item())

if branch. x: tensor(-2., requires_grad=True)
val:  -0.0
grad: 0.0
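Because the graph is recorded while the code runs, ordinary Python loops work the same way as the `if` in `my_relu`. A minimal sketch; `repeated_square` is a hypothetical helper, not part of PyTorch:

```python
import torch

def repeated_square(x: torch.Tensor, n: int) -> torch.Tensor:
    # a plain Python loop: each iteration adds one multiplication to the graph
    for _ in range(n):
        x = x * x
    return x

x = torch.tensor(1.5, requires_grad=True)
y = repeated_square(x, n=2)    # y = x**4
y.backward()                   # dy/dx = 4 * x**3
print("val: ", y.item())       # 5.0625
print("grad:", x.grad.item())  # 13.5
```

Calling it with a different `n` simply records a different graph; nothing has to be declared up front.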

"I don't get it" vs "WOOOW!"

What you don't see (coming from TensorFlow 1.x):

session.run(), tf.control_dependencies(), tf.while_loop(), tf.cond(), tf.global_variables_initializer()

TensorFlow 2

PyTorch Features

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torchvision.models
from ipdb import set_trace

# use the first GPU if one is available, otherwise fall back to the CPU
DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

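Tensors and GPU

a = torch.rand(3, 3)
a.shape

torch.Size([3, 3])

a + a @ a.t();  # transpose, matrix multiply (@) and add, on the CPU
a = a.to(DEVICE)  # move the tensor to DEVICE
a + a @ a.t();  # identical code, now running on DEVICE
a = a.cpu()  # or a.cuda(); these return a tensor rather than moving `a` in place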
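The "PyTorch is like numpy" point also holds literally: tensors convert to and from NumPy arrays. A small sketch; note that `torch.from_numpy` shares memory with the array, so in-place changes show up on both sides (CPU tensors only):

```python
import numpy as np
import torch

arr = np.arange(6, dtype=np.float32).reshape(2, 3)
t = torch.from_numpy(arr)  # no copy: t and arr share the same memory
t.mul_(2)                  # in-place multiply on the tensor ...
print(arr)                 # ... is visible in the NumPy array as well

gram = t @ t.T             # NumPy-like operators work on tensors
back = gram.numpy()        # back to NumPy, again without a copy
print(back)
```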

torch.nn

model = nn.Sequential(
    nn.Conv2d(in_channels=1, out_channels=20, kernel_size=5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
)

batch = torch.rand(16, 1, 24, 24)  # (batch, channels, height, width)
model(batch);  # forward pass
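With the default `stride=1` and no padding, each 5x5 convolution shrinks height and width by `kernel_size - 1 = 4` (24 -> 20 -> 16), and `AdaptiveAvgPool2d(1)` averages what is left down to 1x1. Since `nn.Sequential` is iterable, a short loop can confirm the shapes layer by layer:

```python
import torch
import torch.nn as nn

model = nn.Sequential(
    nn.Conv2d(in_channels=1, out_channels=20, kernel_size=5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
)

x = torch.rand(16, 1, 24, 24)
shapes = []
for layer in model:  # run the layers one at a time and record each output shape
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```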

class MyModel(nn.Module):
    def __init__(self):
        super().__init__()
        # layers assigned as attributes are registered as submodules
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=20, kernel_size=5)
        self.conv2 = nn.Conv2d(20, 64, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        return F.adaptive_avg_pool2d(x, 1)
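`MyModel` returns a `(N, 64, 1, 1)` feature map. `nn.CrossEntropyLoss` expects `(N, n_classes)` logits for classification, so one option is to flatten the features and add a linear head. A sketch under that assumption; `MyClassifier` and `n_classes=10` are illustrative choices, not from the original:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class MyClassifier(nn.Module):
    """Hypothetical variant of MyModel with a linear classification head."""

    def __init__(self, n_classes: int = 10):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=20, kernel_size=5)
        self.conv2 = nn.Conv2d(20, 64, 5)
        self.head = nn.Linear(64, n_classes)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = F.adaptive_avg_pool2d(x, 1)  # (N, 64, 1, 1)
        x = torch.flatten(x, 1)          # (N, 64)
        return self.head(x)              # (N, n_classes) logits

logits = MyClassifier()(torch.rand(16, 1, 28, 28))  # MNIST-sized input
loss = F.cross_entropy(logits, torch.randint(0, 10, (16,)))
```

The adaptive pooling makes the head independent of the input resolution, so 24x24 and 28x28 inputs both work.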

from collections import OrderedDict

def bn_conv_relu(in_channels, out_channels, kernel_size=5):
    # a reusable block: a function that returns a small nn.Sequential
    return nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.Conv2d(in_channels, out_channels, kernel_size),
        nn.ReLU(),
    )

# the OrderedDict keys become the submodule names
model = nn.Sequential(
    OrderedDict({
        "conv1": bn_conv_relu(1, 20, 7),
        "conv2": bn_conv_relu(20, 64),
        "aap": nn.AdaptiveAvgPool2d(1),
    })
)

model

Sequential(
  (conv1): Sequential(
    (0): BatchNorm2d(1, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (1): Conv2d(1, 20, kernel_size=(7, 7), stride=(1, 1))
    (2): ReLU()
  )
  (conv2): Sequential(
    (0): BatchNorm2d(20, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (1): Conv2d(20, 64, kernel_size=(5, 5), stride=(1, 1))
    (2): ReLU()
  )
  (aap): AdaptiveAvgPool2d(output_size=1)
)
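Because the layers were registered under names, they can be reached as attributes, and the inner blocks can still be indexed by position. A self-contained sketch rebuilding the same model (here with a list of pairs, which makes the order explicit):

```python
from collections import OrderedDict

import torch.nn as nn

def bn_conv_relu(in_channels, out_channels, kernel_size=5):
    return nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.Conv2d(in_channels, out_channels, kernel_size),
        nn.ReLU(),
    )

model = nn.Sequential(OrderedDict([
    ("conv1", bn_conv_relu(1, 20, 7)),
    ("conv2", bn_conv_relu(20, 64)),
    ("aap", nn.AdaptiveAvgPool2d(1)),
]))

conv = model.conv1[1]     # attribute access by name, then index into the inner Sequential
print(conv.weight.shape)  # torch.Size([20, 1, 7, 7])
names = [name for name, _ in model.named_children()]
```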

# move the model to DEVICE (the GPU, if one is available)
model = model.to(DEVICE)

# nn.Linear as an example of an nn.Module
lin = nn.Linear(1, 3, bias=True)
print(lin)

Linear(in_features=1, out_features=3, bias=True)

lin.state_dict()

OrderedDict([('weight', tensor([[0.5689],
        [0.7325],
        [0.9801]])),
             ('bias', tensor([ 0.1557, -0.9874, 0.5309]))])

for p in lin.parameters():
    print(p)
    print()

Parameter containing:
tensor([[0.5689],
        [0.7325],
        [0.9801]], requires_grad=True)

Parameter containing:
tensor([ 0.1557, -0.9874, 0.5309], requires_grad=True)
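The `state_dict` is also the usual way to save and restore weights: `torch.save` it, then `load_state_dict` it into a freshly constructed model with the same architecture. A minimal round trip, using an in-memory `io.BytesIO` buffer as a stand-in for a file on disk:

```python
import io

import torch
import torch.nn as nn

lin = nn.Linear(1, 3, bias=True)

buffer = io.BytesIO()  # stands in for a file such as "model.pt"
torch.save(lin.state_dict(), buffer)
buffer.seek(0)

restored = nn.Linear(1, 3, bias=True)         # same architecture, fresh random weights
restored.load_state_dict(torch.load(buffer))  # copy the saved tensors in
same = all(torch.equal(a, b) for a, b in zip(lin.parameters(), restored.parameters()))
print(same)  # True
```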

def init_weights(module):
    # custom initialization, applied only to nn.Linear layers
    if isinstance(module, nn.Linear):
        print(f"Initializing {module} with uniform")
        nn.init.uniform_(module.weight)

lin.apply(init_weights);  # calls init_weights on every submodule and on lin itself

Initializing Linear(in_features=1, out_features=3, bias=True) with uniform
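`apply` is recursive: it visits every submodule (children first, then the module itself), so one function can initialize a whole nested model. A sketch; zeroing the biases and the `initialized` bookkeeping list are illustrative additions:

```python
import torch.nn as nn

initialized = []  # hypothetical bookkeeping: which modules were touched

def init_weights(module):
    if isinstance(module, nn.Linear):
        nn.init.uniform_(module.weight)
        nn.init.zeros_(module.bias)
        initialized.append(module)

model = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Sequential(nn.Linear(8, 8), nn.ReLU()),  # nested container
    nn.Linear(8, 2),
)
model.apply(init_weights)
print(len(initialized))  # 3: the nested Linear is reached as well
```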

Dataset and DataLoader

from torch.utils.data import Dataset, DataLoader

dataset = torchvision.datasets.MNIST("data/raw/", download=True)
len(dataset)

60000

dataset[1]

(<PIL.Image.Image image mode=L size=28x28 at 0x7FF9B45EDF98>, 0)

print(f"Label: {dataset[1][1]}")
dataset[1][0]  # displays the image in the notebook

Label: 0

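# MNIST yields PIL images, which the default collate function cannot batch,
# so convert them to tensors before handing the dataset to a DataLoader
dataset.transform = torchvision.transforms.ToTensor()

dataloader = DataLoader(
    dataset,
    batch_size=64,
    num_workers=4,  # load batches in 4 background worker processes
    shuffle=True,   # reshuffle the samples every epoch
)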
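MNIST is a ready-made `Dataset`; for your own data a map-style dataset only needs `__len__` and `__getitem__`, and `DataLoader` takes care of batching, shuffling and collation. A toy sketch; `SquaresDataset` is hypothetical:

```python
import torch
from torch.utils.data import DataLoader, Dataset

class SquaresDataset(Dataset):
    """Hypothetical toy dataset: item i is (tensor([i]), i**2)."""

    def __init__(self, n: int):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        x = torch.tensor([float(idx)])
        y = idx ** 2
        return x, y

ds = SquaresDataset(10)
dl = DataLoader(ds, batch_size=4, shuffle=False)  # default num_workers=0: load in the main process
batches = list(dl)
xb, yb = batches[0]
print(xb.shape, yb)  # the collate function stacks x into (4, 1) and turns y into a tensor
```

With 10 items and `batch_size=4` the last batch holds the remaining 2 items (unless `drop_last=True`).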

model + loss + optimizer

model  # from above
loss_fn = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001)

# per-parameter-group options: conv1 uses lr=0.001,
# conv2 has no "lr" key and falls back to the default lr=0.01
optimizer = optim.SGD(
    [
        {"params": model.conv1.parameters(), "lr": 0.001},
        {"params": model.conv2.parameters()},
    ],
    lr=0.01,
)
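The resulting per-group settings live in `optimizer.param_groups`, a list of dicts that can also be edited during training, for example for a hand-rolled learning-rate decay (`torch.optim.lr_scheduler` offers ready-made schedules). A sketch with a stand-in two-layer model; the layer sizes are arbitrary:

```python
from collections import OrderedDict

import torch.nn as nn
import torch.optim as optim

model = nn.Sequential(OrderedDict([
    ("conv1", nn.Conv2d(1, 4, 3)),
    ("conv2", nn.Conv2d(4, 8, 3)),
]))

optimizer = optim.SGD(
    [
        {"params": model.conv1.parameters(), "lr": 0.001},
        {"params": model.conv2.parameters()},  # no "lr": falls back to the default
    ],
    lr=0.01,
)
print([group["lr"] for group in optimizer.param_groups])  # [0.001, 0.01]

# decay every group's learning rate by 10x
for group in optimizer.param_groups:
    group["lr"] *= 0.1
lrs = [group["lr"] for group in optimizer.param_groups]
```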

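Training Loop

def fit(model, loss_fn, optimizer, train_dl, valid_dl, n_epochs: int):
    for epoch in range(n_epochs):
        # TRAIN
        model.train()  # training behavior for layers like BatchNorm and Dropout
        for x, y in train_dl:
            x, y = x.to(DEVICE), y.to(DEVICE)
            optimizer.zero_grad()  # clear the gradients of the previous step
            y_ = model(x)
            loss = loss_fn(y_, y)  # loss functions take (prediction, target)
            loss.backward()
            optimizer.step()

        # EVAL
        model.eval()  # evaluation behavior (BatchNorm running stats, no Dropout)
        with torch.no_grad():  # no autograd graph is needed for validation
            valid_loss = 0.0
            for x, y in valid_dl:
                x, y = x.to(DEVICE), y.to(DEVICE)
                y_ = model(x)
                valid_loss += loss_fn(y_, y).item()
        print(f"epoch {epoch}: valid loss {valid_loss / len(valid_dl):.4f}")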
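To check the loop mechanics end to end without downloading MNIST, here is a self-contained sketch of the same train/eval steps on synthetic, linearly separable data. The data, the `nn.Linear(2, 2)` model, `lr=0.1` and the `valid_loss` helper are assumptions chosen to keep it small:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# synthetic data: the class is 1 when the sum of the two features is positive
x = torch.randn(512, 2)
y = (x.sum(dim=1) > 0).long()
train_dl = DataLoader(TensorDataset(x[:400], y[:400]), batch_size=32, shuffle=True)
valid_dl = DataLoader(TensorDataset(x[400:], y[400:]), batch_size=32)

model = nn.Linear(2, 2).to(DEVICE)
loss_fn = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.1)

def valid_loss():
    # mean loss per sample over the validation set
    model.eval()
    with torch.no_grad():
        total = sum(
            loss_fn(model(xb.to(DEVICE)), yb.to(DEVICE)).item() * len(xb)
            for xb, yb in valid_dl
        )
    return total / len(valid_dl.dataset)

before = valid_loss()
for epoch in range(5):
    model.train()
    for xb, yb in train_dl:
        xb, yb = xb.to(DEVICE), yb.to(DEVICE)
        optimizer.zero_grad()
        loss = loss_fn(model(xb), yb)  # (prediction, target)
        loss.backward()
        optimizer.step()
after = valid_loss()
print(f"valid loss: {before:.3f} -> {after:.3f}")
```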

Multiple GPUs

# replicate the model on all visible GPUs and split each batch between them
model = nn.DataParallel(model)
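One practical detail: `nn.DataParallel` keeps the original model in its `.module` attribute, so `state_dict` keys gain a `module.` prefix, and saving `model.module.state_dict()` avoids that. A sketch that also runs on a CPU-only machine, where the wrapper simply calls the wrapped model (for serious multi-GPU training the PyTorch docs recommend `DistributedDataParallel` instead):

```python
import torch
import torch.nn as nn

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)  # parameters must live on the first GPU (device_ids[0])
dp = nn.DataParallel(model)         # without GPUs this just forwards to model

out = dp(torch.rand(8, 4, device=device))  # the batch of 8 is split across GPUs, if any
wrapped_keys = list(dp.state_dict())       # ['module.weight', 'module.bias']
plain_keys = list(dp.module.state_dict())  # ['weight', 'bias']
print(wrapped_keys, plain_keys)
```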

Pre-Trained models

torchvision.models.resnet18(pretrained=True)
torchvision.models.densenet121(pretrained=True)
torchvision.models.inception_v3(pretrained=True)
torchvision.models.squeezenet1_1(pretrained=True);

from pprint import pprint
import pretrainedmodels  # third-party package: pip install pretrainedmodels

pprint(sorted(pretrainedmodels.model_names))

['alexnet', 'bninception', 'cafferesnet101', 'densenet121', 'densenet161', 'densenet169', 'densenet201', 'dpn107', 'dpn131', 'dpn68', 'dpn68b', 'dpn92', 'dpn98', 'fbresnet152', 'inceptionresnetv2', 'inceptionv3', 'inceptionv4', 'nasnetalarge', 'nasnetamobile', 'pnasnet5large', 'polynet', 'resnet101', 'resnet152', 'resnet18', 'resnet34', 'resnet50', 'resnext101_32x4d', 'resnext101_64x4d', 'se_resnet101', 'se_resnet152', 'se_resnet50', 'se_resnext101_32x4d', 'se_resnext50_32x4d', 'senet154', 'squeezenet1_0', 'squeezenet1_1', 'vgg11', 'vgg11_bn', 'vgg13', 'vgg13_bn', 'vgg16', 'vgg16_bn', 'vgg19', 'vgg19_bn', 'xception']

PyTorch 1.0

- Focus: "from research to production"

- API mostly unchanged

- distributed backend redesigned

- torch.jit to compile models: torch.jit.trace, torch.jit.script

- LibTorch C++ frontend

- (ONNX - Open Neural Network eXchange format)

- (PyTorch -> ONNX -> whatever)
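The difference between the two `torch.jit` entry points matters for code like `my_relu`: `torch.jit.trace` runs the function once on an example input and records only the operations that executed, while `torch.jit.script` compiles the Python source, control flow included. A small sketch with a hypothetical function `f` (tracing it emits a `TracerWarning` about the data-dependent branch):

```python
import torch

def f(x: torch.Tensor) -> torch.Tensor:
    # data-dependent control flow
    if x.sum() > 0:
        return x * 2
    return x * 3

scripted = torch.jit.script(f)                     # keeps the if/else
traced = torch.jit.trace(f, torch.tensor([1.0]))  # records only the "x * 2" path

neg = torch.tensor([-1.0])
print(scripted(neg))  # tensor([-3.])
print(traced(neg))    # tensor([-2.]): the branch was frozen at trace time
```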

Ecosystem

- high-level training libs (ignite, TNT, skorch, fast.ai, PyToune, MagNet, etc.)

- AllenNLP, Flair, fairseq, Glow, Pyro, PySyft, GPyTorch

- https://colab.research.google.com/ supports PyTorch

- https://pytorch.org/ecosystem

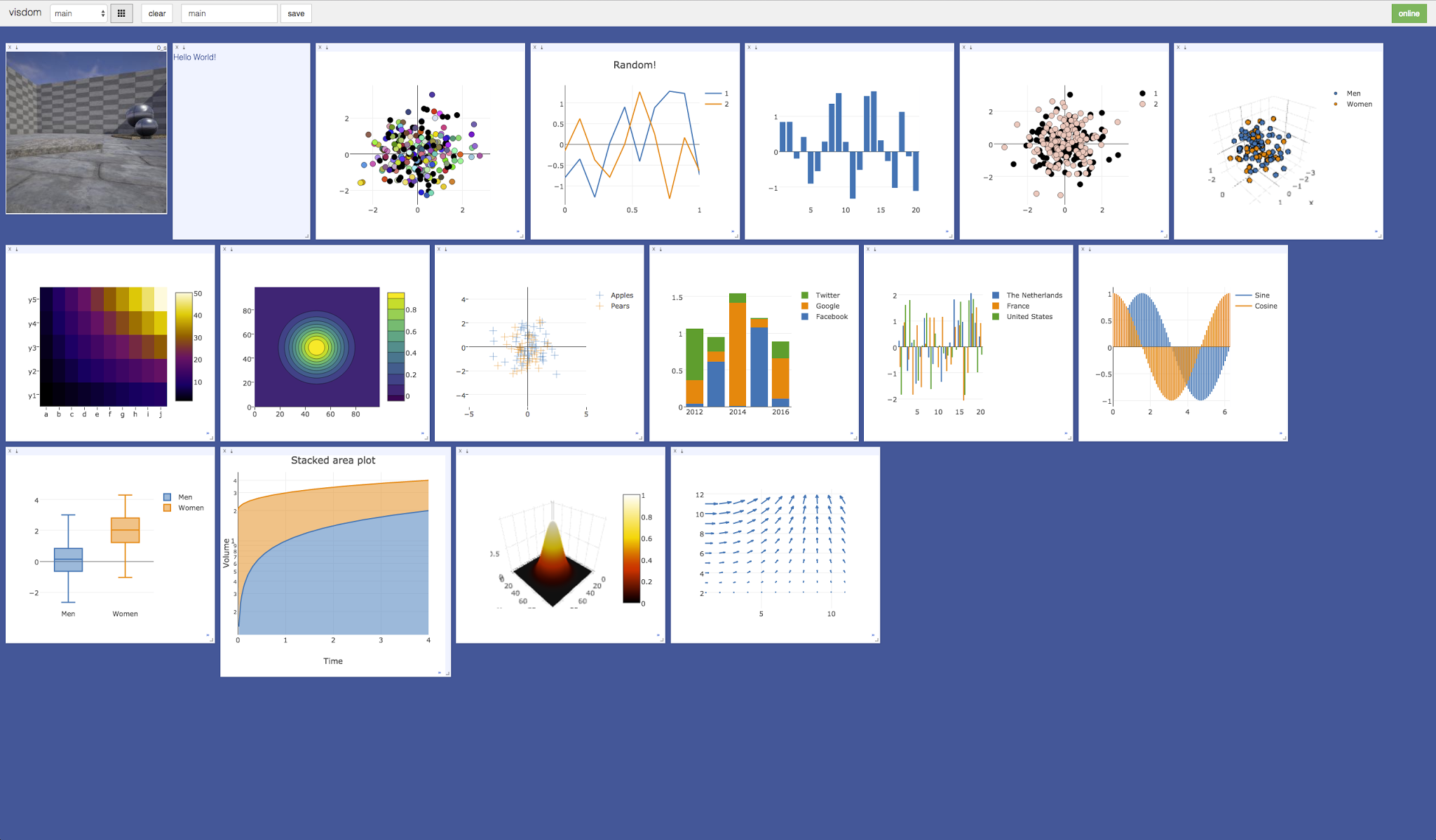

Visdom

Recap

- PyTorch is just stupid Python

- PyTorch is like numpy (on the GPU and with autograd)

- small and concise API surface

- Dataset and DataLoader

- import torch.nn as nn

- import torch.nn.functional as F

- import torch.optim as optim

- torch.jit and ONNX

- PyTorch and TensorFlow are converging